We built a three-block 3-D convolutional network that learns spatial-spectral patterns from MODIS Terra and Aqua imagery, then added five system-level optimizations (memory-mapped I/O, precomputed patch indices, large-batch training, torch.compile, and mixed precision) to create the accelerated 3DCNN+ variant.

Code is available here: https://github.com/Rivas-AI/dust-3dcnn.git

TL;DR

- We detect dust storms from MODIS multispectral imagery using a 3‑D CNN pipeline.

- Our optimized 3DCNN+ reaches 91.1% pixel‑level accuracy with a 21× training speedup.

- The combination of memory‑mapped data handling and mixed‑precision training makes near‑real‑time inference feasible.

Why it matters

Dust storms degrade air quality, threaten aviation safety, and impact climate models. Rapid, reliable detection from satellite data is essential for public‑health alerts and operational forecasting. Traditional remote‑sensing pipelines struggle with the sheer volume of MODIS granules and with the latency introduced by heavyweight deep‑learning models. By drastically reducing training time and enabling full‑granule inference, our approach brings dust‑storm monitoring closer to real‑time operation, supporting timely decision‑making for agencies worldwide.

How it works

We first collect MODIS Terra and Aqua observations, each providing 38 spectral channels at 1-km resolution. Missing pixels are filled using a local imputation scheme, then each channel is normalized to zero mean and unit variance. From each granule we extract overlapping 5 × 5 × 38 patches, which serve as inputs to a three-block 3-D convolutional neural network. The network learns joint spatial-spectral features that distinguish dust-laden pixels from clear sky, water, and vegetation. During training we compute a weighted mean-squared error loss that emphasizes high-intensity dust regions.

The optimized 3DCNN+ variant adds five system-level optimizations: (1) memory-mapped storage of the full dataset, (2) precomputed indices that guarantee valid patch centers, (3) large-batch training (up to 32,768 patches per step), (4) torch.compile-based graph optimization, and (5) automatic mixed-precision arithmetic. Together these keep the GPU highly utilized and dramatically shorten training epochs.
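The first two optimizations can be illustrated with a minimal NumPy sketch. It is not the code from the repository: the file layout, the helper names `valid_centers` and `gather_patches`, and the definition of "valid" (the 5 × 5 window lies fully inside the granule and touches no masked pixel) are assumptions made for illustration.

```python
import os
import tempfile

import numpy as np

PATCH = 5          # spatial patch size, from the text
HALF = PATCH // 2
CHANNELS = 38      # spectral channels, from the text


def valid_centers(mask: np.ndarray) -> np.ndarray:
    """Return (row, col) centers whose whole PATCH x PATCH window is usable.

    `mask` is a hypothetical boolean array, True where a pixel may be used.
    """
    windows = np.lib.stride_tricks.sliding_window_view(mask, (PATCH, PATCH))
    ok = windows.all(axis=(2, 3))          # True where every pixel in the window is valid
    return np.argwhere(ok) + HALF          # shift window origin to window center


def gather_patches(cube: np.ndarray, centers: np.ndarray) -> np.ndarray:
    """Copy a batch of patches out of an (H, W, C) array or memmap."""
    out = np.empty((len(centers), PATCH, PATCH, cube.shape[2]), dtype=np.float32)
    for i, (r, c) in enumerate(centers):
        out[i] = cube[r - HALF:r + HALF + 1, c - HALF:c + HALF + 1, :]
    return out


# Write a small synthetic "granule" to disk, then reopen it read-only as a memmap
# so that only the slices actually indexed are paged into RAM.
path = os.path.join(tempfile.mkdtemp(), "granule.dat")
h, w = 12, 10
store = np.memmap(path, dtype=np.float32, mode="w+", shape=(h, w, CHANNELS))
store[:] = np.random.default_rng(0).standard_normal((h, w, CHANNELS)).astype(np.float32)
store.flush()
del store

cube = np.memmap(path, dtype=np.float32, mode="r", shape=(h, w, CHANNELS))
mask = np.ones((h, w), dtype=bool)
mask[0, 0] = False                         # pretend one corner pixel is unusable
centers = valid_centers(mask)              # computed once, reused every epoch
batch = gather_patches(cube, centers[:4])  # shape (4, 5, 5, 38)
```

Precomputing `centers` once means each training step only samples row indices from that array, with no per-step boundary or validity checks.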

What we found

Our experiments used 117 MODIS granules (100 for training, 17 for testing) that span deserts, coastal regions, and agricultural lands. The baseline 3DCNN achieved 91.1% accuracy and a mean‑squared error (MSE) of 0.020. After applying the five optimizations, 3DCNN+ retained the same 91.1% accuracy while reducing MSE to 0.014. Most importantly, the total wall‑clock training time dropped from roughly 12 hours to under 35 minutes, a 21‑fold speedup. Inference on a full granule (≈1 GB of radiance data) runs in less than two seconds on an A100 GPU, confirming that near‑real‑time deployment is practical.

- Pixel‑level classification accuracy: 91.1% (consistent across all test regions).

- Weighted MSE for the optimized model: 0.014, reflecting tighter fit on high‑dust pixels.

- Training speedup: 21× using the 3DCNN+ system enhancements.

- Data efficiency: memory-mapped I/O reduced RAM usage by more than 90%, allowing us to train on the full dataset without down-sampling.
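The headline numbers can be sanity-checked with a few lines of arithmetic. The training times and batch size come from the text; storing patches as 32-bit floats is an assumption used only to estimate the size of one batch.

```python
# Speedup implied by the reported wall-clock times.
baseline_minutes = 12 * 60      # "roughly 12 hours"
optimized_minutes = 35          # "under 35 minutes"
speedup = baseline_minutes / optimized_minutes   # about 20.6, i.e. roughly 21x

# Size of one large batch of 5 x 5 x 38 patches, assuming float32 storage.
values_per_patch = 5 * 5 * 38
bytes_per_value = 4             # float32 (assumption)
batch_size = 32_768
batch_mib = batch_size * values_per_patch * bytes_per_value / 2**20

print(f"speedup ~ {speedup:.1f}x, one batch ~ {batch_mib:.2f} MiB")
```

Under that assumption a full batch of inputs is on the order of a hundred mebibytes, which is small next to a ≈1 GB granule and helps explain why very large batches are practical.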

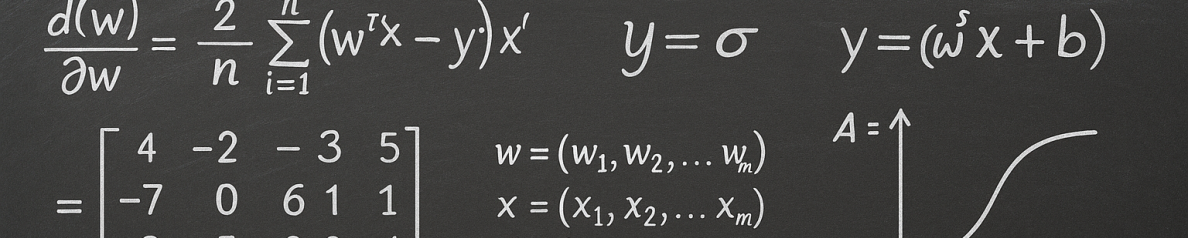

Key equation

$$\mathrm{WMSE} = \frac{1}{N}\sum_{i=1}^{N} w_i\,(y_i - \hat{y}_i)^2$$

The weighted MSE (WMSE) assigns larger weights $w_i$ to pixels with strong dust signatures, ensuring that the loss focuses on the most scientifically relevant portions of the scene. Here $y_i$ and $\hat{y}_i$ are the reference and predicted values for pixel $i$, and $N$ is the number of pixels.
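As a small worked example, a weighted MSE of the form mean(w_i · (y_i − ŷ_i)²) can be computed as below. The weighting rule `1 + alpha * y_true` and the value of `alpha` are hypothetical choices for illustration; the text does not specify how the weights are derived.

```python
import numpy as np


def weighted_mse(y_true, y_pred, weights):
    """Mean of per-pixel squared errors, each scaled by its weight."""
    y_true = np.asarray(y_true, dtype=np.float64)
    y_pred = np.asarray(y_pred, dtype=np.float64)
    weights = np.asarray(weights, dtype=np.float64)
    return float(np.mean(weights * (y_true - y_pred) ** 2))


y_true = np.array([0.0, 0.0, 1.0])   # last pixel is strongly dusty
y_pred = np.array([0.1, 0.0, 0.5])   # model underestimates the dusty pixel
alpha = 4.0                          # hypothetical emphasis factor
weights = 1.0 + alpha * y_true       # hypothetical rule: more dust, more weight

plain = weighted_mse(y_true, y_pred, np.ones_like(y_true))
wmse = weighted_mse(y_true, y_pred, weights)
```

With these numbers the error on the dusty pixel dominates the weighted loss far more than it does the plain MSE, which is the intended effect.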

Limits and next steps

While 3DCNN+ delivers high accuracy and speed, we observed three recurring sources of error that limit performance:

- Label imbalance: Dust pixels make up a small fraction of all MODIS samples, so the model tends to under-predict rare dust events.

- Spatial overfitting: The receptive field of three‑by‑three convolutions can miss larger‑scale dust structures that extend beyond the 5 × 5 patch.

- Limited temporal context: MODIS provides only a single snapshot per overpass; without multi‑temporal cues the model sometimes confuses dust with bright surfaces.
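The spatial limit in the second point can be made concrete with a receptive-field calculation. The sketch assumes one 3 × 3 spatial convolution per block with stride 1 and no dilation, which the text does not state explicitly; `receptive_field` is an illustrative helper.

```python
def receptive_field(kernel_sizes, strides=None):
    """Theoretical receptive field (in input pixels) of stacked convolutions."""
    strides = strides or [1] * len(kernel_sizes)
    rf, jump = 1, 1
    for k, s in zip(kernel_sizes, strides):
        rf += (k - 1) * jump   # each layer widens the field by (k - 1) input steps
        jump *= s              # stride increases the step between neighboring outputs
    return rf


patch_size = 5
rf = receptive_field([3, 3, 3])       # three stacked 3 x 3 layers
usable_context = min(rf, patch_size)  # the patch caps what the network can see
```

Under these assumptions the stacked convolutions could cover 7 pixels, so the 5 × 5 patch (about 5 km at 1-km resolution) is the binding limit on spatial context rather than the kernels themselves.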

To address these issues, we are exploring transformer-based architectures that can aggregate information over larger spatial extents and multiple time steps. In particular, the proposed Autoregressive Masked Autoencoder Swin Transformer (AR-MAE-Swin) constrains model capacity by reducing the effective Vapnik–Chervonenkis (VC) dimension, which our theoretical analysis predicts will improve sample efficiency by roughly 30%. Future work will also incorporate self-supervised pretraining on unlabeled MODIS sequences and will test the pipeline on other aerosol phenomena such as smoke and volcanic ash.

FAQ

- Can the pipeline run on commodity hardware?

- Yes. Because the bulk of the data resides on disk and is accessed via memory‑mapping, only a small fraction needs to be loaded into RAM. Mixed‑precision training further reduces GPU memory requirements, allowing the model to run on a single modern GPU (e.g., RTX 3080) without sacrificing accuracy.

- How does the weighted loss affect dust‑storm maps?

- The weighted mean‑squared error gives higher penalty to mis‑classifications in regions with strong dust reflectance. This focuses the optimizer on the most hazardous pixels, resulting in cleaner, more reliable dust masks that align with ground‑based observations.
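The two answers above can be tied together in a minimal PyTorch sketch of one training step that combines mixed precision with a weighted MSE loss. It is a sketch under stated assumptions, not the architecture from the paper: the `Tiny3DCNN` class, its channel widths, the bfloat16 autocast dtype, and the weighting rule are all illustrative.

```python
import torch
import torch.nn as nn


class Tiny3DCNN(nn.Module):
    """Hypothetical three-block 3-D CNN; channel widths are illustrative."""

    def __init__(self):
        super().__init__()
        self.blocks = nn.Sequential(
            nn.Conv3d(1, 8, kernel_size=3, padding=1), nn.ReLU(),
            nn.Conv3d(8, 16, kernel_size=3, padding=1), nn.ReLU(),
            nn.Conv3d(16, 32, kernel_size=3, padding=1), nn.ReLU(),
        )
        self.head = nn.Linear(32, 1)

    def forward(self, x):
        # x: (batch, 1, 38, 5, 5) -> one dust-intensity value per patch
        feats = self.blocks(x).mean(dim=(2, 3, 4))  # global average over the patch
        return self.head(feats).squeeze(1)


def train_step(model, optimizer, x, y, w, use_amp):
    """One optimizer step with a weighted MSE loss, optionally under autocast."""
    optimizer.zero_grad(set_to_none=True)
    with torch.autocast(device_type=x.device.type, dtype=torch.bfloat16, enabled=use_amp):
        pred = model(x)
    loss = (w * (pred.float() - y) ** 2).mean()  # loss computed in float32
    loss.backward()
    optimizer.step()
    return loss.item()


torch.manual_seed(0)
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = Tiny3DCNN().to(device)
# model = torch.compile(model)  # optional graph optimization; needs a working compiler backend
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

x = torch.randn(16, 1, 38, 5, 5, device=device)  # synthetic patches
y = torch.rand(16, device=device)                # synthetic dust intensities
w = 1.0 + 4.0 * y                                # hypothetical weighting rule
losses = [train_step(model, optimizer, x, y, w, use_amp=device.type == "cuda")
          for _ in range(5)]
```

Using bfloat16 avoids the gradient scaling that float16 training typically needs; on GPUs without bfloat16 support, float16 with a gradient scaler would be the usual alternative.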

Read the paper

For a complete technical description, dataset details, and the full theoretical analysis, please consult the original manuscript.

Gates, C., Moorhead, P., Ferguson, J., Darwish, O., Stallman, C., Rivas, P., & Quansah, P. (2025, July). Near Real-Time Dust Aerosol Detection with 3D Convolutional Neural Networks on MODIS Data. In Proceedings of the 29th International Conference on Image Processing, Computer Vision, & Pattern Recognition (IPCV’25) of the 2025 World Congress in Computer Science, Computer Engineering, and Applied Computing (CSCE’25) (pp. 1–13). Las Vegas, NV, USA.