TL;DR

- We reviewed legal NLP literature published between 2015 and 2022, covering multiclass classification, summarisation, information extraction, question answering, and coreference resolution in legal texts.

- All papers agree on a taxonomy that links traditional machine‑learning methods, deep‑learning architectures, and transformer‑based PLMs to specific legal document types.

- Our synthesis shows that domain‑adapted PLMs (e.g., Legal‑BERT) consistently outperform generic models, and that long‑context architectures (Longformer, BigBird) are the stronger choice on long documents.

- Key gaps remain in coreference resolution and specialised domains such as tax law and patent analysis.

Why it matters

Legal texts are dense, highly structured, and often lengthy. Automating their analysis improves efficiency, reduces human error, and makes legal information more accessible to practitioners, regulators, and the public. Across the surveyed papers, authors stress that NLP has become essential for handling privacy policies, court records, patent filings, and other regulatory documents. By extracting and summarising relevant information, legal NLP directly supports faster decision‑making and broader access to justice.

How it works

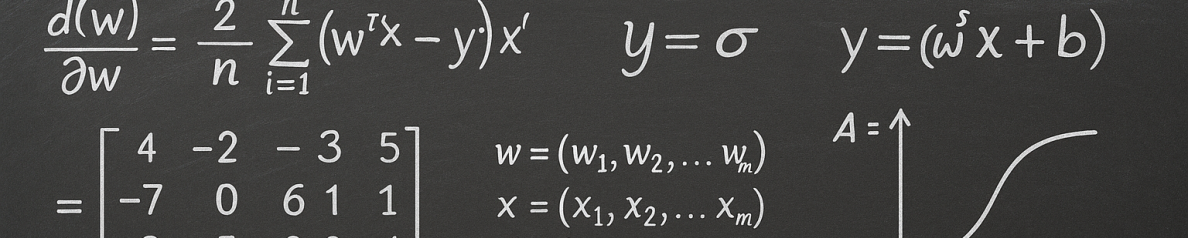

We distilled the methodological landscape into five core steps that recur across the surveyed papers:

- Task definition. Researchers first identify the legal NLP problem: classification, summarisation, extraction, question answering, or coreference resolution.

- Data preparation. Legal corpora are collected (privacy policies, judgments, patents, tax rulings, etc.) and annotated using standard schemes.

- Embedding selection. Word‑level embeddings such as Word2Vec or GloVe are combined with contextualised embeddings from PLMs.

- Model choice. Traditional machine‑learning models (SVM, Naïve Bayes) and deep‑learning architectures (CNN, LSTM, BiLSTM‑CRF) are evaluated alongside transformer‑based PLMs (BERT, RoBERTa, Longformer, BigBird, SpanBERT).

- Evaluation & fine‑tuning. Performance is measured on task‑specific metrics; domain‑adapted PLMs are often further pre‑trained on legal corpora before fine‑tuning.

This workflow appears consistently in the literature and provides a reproducible blueprint for new legal NLP projects.
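
To make the evaluation step more concrete, here is a minimal sketch of one metric that is commonly reported for multiclass classification, macro‑averaged F1. The choice of metric, the function name `macro_f1`, and the document‑type labels are our assumptions for illustration; the surveyed papers use a range of task‑specific metrics.

```python
from collections import Counter


def macro_f1(gold, predicted):
    """Macro-averaged F1: compute F1 per class, then take the unweighted mean."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")

    labels = sorted(set(gold) | set(predicted))
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, predicted):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted class p, but it was wrong
            fn[g] += 1  # missed an instance of the true class g

    scores = []
    for label in labels:
        predicted_count = tp[label] + fp[label]
        gold_count = tp[label] + fn[label]
        precision = tp[label] / predicted_count if predicted_count else 0.0
        recall = tp[label] / gold_count if gold_count else 0.0
        total = precision + recall
        scores.append(2 * precision * recall / total if total else 0.0)

    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical labels: document types a legal classifier might predict.
gold = ["privacy_policy", "judgment", "patent", "judgment", "patent", "privacy_policy"]
pred = ["privacy_policy", "judgment", "judgment", "judgment", "patent", "patent"]
score = macro_f1(gold, pred)
print(f"macro-F1 = {score:.3f}")
```

Macro averaging weights every class equally, which is useful when some document types are rare. In practice an established implementation such as scikit‑learn's `f1_score(..., average="macro")` would usually be preferred over hand‑written code.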

What we found

Our synthesis highlights several recurring findings:

- Comprehensive taxonomy. All sources agree on a systematic mapping of methods, embeddings, and PLMs to five principal legal tasks.

- Transformer dominance. Transformer‑based PLMs, especially BERT variants, are the most frequently used models across tasks, showing strong gains over traditional machine‑learning baselines.

- Long‑document handling. Architectures designed for extended context windows (Longformer, BigBird) consistently outperform standard BERT when processing lengthy legal texts.

- Domain adaptation pays off. Custom legal versions of PLMs (Legal‑BERT, Custom LegalBERT) repeatedly demonstrate higher accuracy on classification, extraction, and question‑answering tasks.

- Benchmarking efforts. Several surveyed papers describe unified benchmarking frameworks that compare dozens of model‑embedding‑document combinations, providing community resources for reproducibility.

- Understudied areas. Coreference resolution and specialised domains such as tax law receive relatively little attention, indicating clear research gaps.
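
The long‑document finding above is easier to appreciate with a concrete picture of the alternative. Standard BERT accepts at most 512 tokens per input, so one common workaround is to split a document into overlapping windows and process each window separately. The sketch below illustrates that workaround; it is our own illustration and is not taken from the surveyed papers. The function name `sliding_windows` and the default `stride` are assumptions.

```python
def sliding_windows(tokens, max_len=512, stride=128):
    """Split a token list into overlapping windows of at most max_len tokens.

    Consecutive windows share `stride` tokens, so text near a window
    boundary is seen with context on both sides. Real tokenizers also
    reserve positions for special tokens, so the usable length is
    slightly smaller than the model limit.
    """
    if max_len <= 0:
        raise ValueError("max_len must be positive")
    if not 0 <= stride < max_len:
        raise ValueError("stride must satisfy 0 <= stride < max_len")
    if not tokens:
        return []

    step = max_len - stride
    windows = []
    start = 0
    while True:
        windows.append(tokens[start:start + max_len])
        if start + max_len >= len(tokens):
            break  # this window already reaches the end of the document
        start += step
    return windows


# A hypothetical 1,200-token judgment, represented by placeholder tokens.
tokens = [f"tok{i}" for i in range(1200)]
windows = sliding_windows(tokens)
print([len(w) for w in windows])  # [512, 512, 432]
```

Each window is scored independently, so the model never sees the whole document at once and the per‑window outputs still have to be merged. Architectures such as Longformer and BigBird avoid this step by using sparse attention to accept much longer inputs in a single pass.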

Limits and next steps

While the surveyed work demonstrates impressive progress, common limitations emerge:

- Interpretability. Many high‑performing models are black‑box transformers, raising concerns for compliance‑sensitive legal applications.

- Resource demands. Large transformer models require substantial computational resources; lighter alternatives (DistilBERT, FastText) are explored, but often sacrifice some accuracy.

- Data scarcity in niche domains. Certain legal sub‑fields (e.g., tax law, patent clause analysis) lack large, publicly available annotated datasets.

Future research in our community should therefore focus on:

- Developing more interpretable, domain‑specific architectures.

- Extending multilingual and multimodal capabilities to cover diverse jurisdictions.

- Creating benchmark datasets for underrepresented tasks, such as coreference resolution.

- Designing efficient training pipelines that balance performance with computational cost.

FAQ

- What are the main legal NLP tasks covered?

- Multiclass classification, summarisation, information extraction, question answering & information retrieval, and coreference resolution.

- Which model families are most commonly used?

- Traditional machine‑learning classifiers (SVM, Naïve Bayes), deep‑learning architectures (CNN, LSTM), and transformer‑based PLMs such as BERT, RoBERTa, Longformer, BigBird, and specialised variants like Legal‑BERT.

- Do transformer models handle long legal documents?

- Yes. Longformer and BigBird are repeatedly cited as more effective for lengthy texts because their sparse attention lets them process far longer input sequences than standard BERT's 512‑token limit.

- Is domain‑specific pre‑training important?

- All sources agree that adapting PLMs with legal corpora (custom legal embeddings) consistently improves performance across tasks.

- What are the biggest open challenges?

- Improving coreference resolution, expanding coverage to niche legal domains, and enhancing model interpretability while keeping resource use manageable.

Read the paper

For the full details of our analysis, please consult the original article.

Quevedo, E., Cerny, T., Rodriguez, A., Rivas, P., Yero, J., Sooksatra, K., Zhakubayev, A., & Taibi, D. (2023). Legal Natural Language Processing from 2015-2022: A Comprehensive Systematic Mapping Study of Advances and Applications. IEEE Access, 1–36. http://doi.org/10.1109/ACCESS.2023.3333946