We systematically surveyed the literature to identify the most common pre‑trained models, fusion strategies, and open challenges when combining text and images in machine learning pipelines.

TL;DR

- We reviewed 88 multimodal machine‑learning papers to map the current landscape.

- BERT for text and ResNet (or VGG) for images dominate feature extraction.

- Simple concatenation remains common, but attention‑based fusion is gaining traction.

Why it matters

Text and images together encode richer semantic information than either modality alone. Harnessing both can improve content understanding, recommendation systems, and decision‑making across domains such as healthcare, social media, and autonomous robotics. However, integrating these signals introduces new sources of noise and vulnerability that must be addressed for reliable deployment.

How it works (plain words)

Our workflow follows three clear steps:

- Gather and filter the literature – we started from 341 retrieved papers and applied inclusion criteria to focus on 88 high‑impact studies.

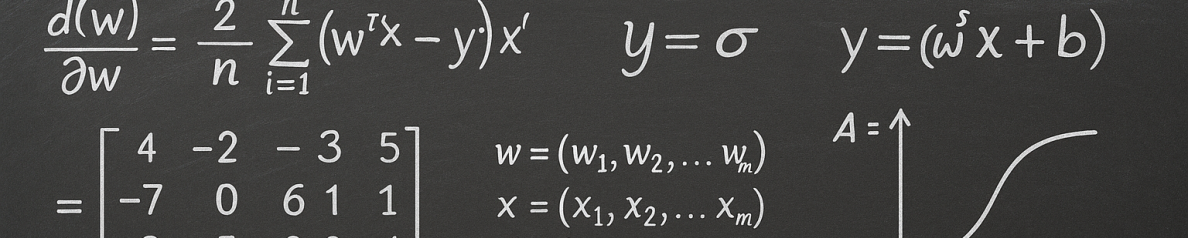

- Extract methodological details – for each study we recorded the text encoder (most often BERT or an LSTM), the vision model (ResNet, VGG, or other CNNs), and the fusion approach (concatenation, early fusion, attention, or advanced neural networks).

- Synthesise findings – we counted how frequently each component appears, noted emerging trends, and listed the recurring limitations reported by authors.
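The screening and counting steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions. The record fields (`modalities`, `peer_reviewed`, `text`, `vision`, `fusion`), the two inclusion rules, and the five example papers are hypothetical placeholders. They are not the survey's actual coding sheet or criteria.

```python
from collections import Counter

# Hypothetical coding sheet: one record per retrieved paper.
# Field names, values and inclusion rules are illustrative, not the survey's data.
retrieved = [
    {"id": 1, "modalities": {"text", "image"}, "peer_reviewed": True,
     "text": "BERT", "vision": "ResNet", "fusion": "concatenation"},
    {"id": 2, "modalities": {"text"}, "peer_reviewed": True,
     "text": "BERT", "vision": None, "fusion": None},
    {"id": 3, "modalities": {"text", "image"}, "peer_reviewed": True,
     "text": "LSTM", "vision": "VGG", "fusion": "attention"},
    {"id": 4, "modalities": {"text", "image"}, "peer_reviewed": False,
     "text": "BERT", "vision": "ResNet", "fusion": "attention"},
    {"id": 5, "modalities": {"text", "image"}, "peer_reviewed": True,
     "text": "BERT", "vision": "ResNet", "fusion": "attention"},
]

# Step 1: inclusion criteria as predicates (assumed rules, for illustration only).
criteria = [
    lambda p: {"text", "image"} <= p["modalities"],  # must combine both modalities
    lambda p: p["peer_reviewed"],                     # must be peer reviewed
]

def screen(papers, rules):
    """Keep only the papers that satisfy every inclusion rule."""
    return [p for p in papers if all(rule(p) for rule in rules)]

# Step 3: how often each coded component appears among the included studies.
def tally(papers, field):
    return Counter(p[field] for p in papers)

included = screen(retrieved, criteria)
print(len(retrieved), "->", len(included))
for field in ("text", "vision", "fusion"):
    print(field, tally(included, field).most_common())
```

Keeping each criterion as a separate predicate makes it straightforward to report how many papers each rule excludes.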

What we found

Feature extraction

- We observed that BERT is the most frequently cited language encoder because of its strong contextual representations across a wide range of tasks.

- For visual features, ResNet is the leading architecture, with VGG also appearing regularly in older studies.

Fusion strategies

- Concatenation – a straightforward method that simply stacks the text and image embeddings – is still the baseline choice in many applications.

- Attention mechanisms – either self‑attention within a joint transformer or cross‑modal attention linking BERT and ResNet embeddings – are increasingly adopted to let the model weigh the most informative signals.

- More complex neural‑network‑based fusions (e.g., graph‑convolutional networks, GAN‑assisted approaches) are reported in emerging studies, especially when robustness to adversarial perturbations is a priority.
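The NumPy sketch below contrasts the first two strategies: concatenation and cross-modal (text-to-image) scaled dot-product attention. It assumes features were already extracted. The text side is 768-dimensional token vectors, the size BERT-base produces. The image side is a 7×7 grid of 2048-dimensional vectors, the output of ResNet-50's last convolutional stage on a 224×224 image. The arrays and projection matrices are random stand-ins, and the shared width `d = 256` is an arbitrary choice. In a trained model the projections would be learned.

```python
import numpy as np

rng = np.random.default_rng(0)

# Random stand-ins for pre-extracted features (shapes only, no real data).
# Assumed sizes: BERT-base gives 768-d token vectors; ResNet-50's last
# convolutional stage gives a 7x7 grid of 2048-d vectors for a 224x224 image.
text_tokens = rng.standard_normal((12, 768))     # 12 tokens, first one plays [CLS]
image_regions = rng.standard_normal((49, 2048))  # 7 * 7 = 49 grid cells

def concat_fusion(text_tokens, image_regions):
    """Baseline: summarise each modality as one vector, then stack them."""
    text_vec = text_tokens[0]                # first-token ([CLS]-style) summary
    image_vec = image_regions.mean(axis=0)   # global average pooling over the grid
    return np.concatenate([text_vec, image_vec])  # shape (768 + 2048,)

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # shift for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_modal_attention(text_tokens, image_regions, w_q, w_k, w_v):
    """Scaled dot-product attention with text as queries and image regions
    as keys/values: each token receives a weighted mix of region features."""
    q = text_tokens @ w_q                     # (n_tokens, d)
    k = image_regions @ w_k                   # (n_regions, d)
    v = image_regions @ w_v                   # (n_regions, d)
    scores = q @ k.T / np.sqrt(q.shape[-1])   # (n_tokens, n_regions)
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ v, weights

d = 256  # hypothetical shared attention width
# Learned in a real model; random (variance-scaled) here.
w_q = rng.standard_normal((768, d)) / np.sqrt(768)
w_k = rng.standard_normal((2048, d)) / np.sqrt(2048)
w_v = rng.standard_normal((2048, d)) / np.sqrt(2048)

fused_concat = concat_fusion(text_tokens, image_regions)
attended, attn = cross_modal_attention(text_tokens, image_regions, w_q, w_k, w_v)
print(fused_concat.shape, attended.shape, attn.shape)  # (2816,) (12, 256) (12, 49)
```

Concatenation leaves all cross-modal interaction to the layers that follow it. The attention weights, by contrast, give each text token its own distribution over image regions, which is what lets the model emphasise the most informative signals.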

Challenges reported across the surveyed papers

- Noisy or mislabeled data – label noise in either modality can degrade joint representations.

- Dataset size constraints – balancing computational cost with sufficient multimodal examples remains difficult.

- Adversarial attacks – malicious perturbations to either text or image streams can cause catastrophic mis‑predictions, and defensive techniques are still in early development.
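The adversarial-attack risk can be illustrated with the fast gradient sign method (FGSM) applied to a single stream. The sketch below is a toy with explicit assumptions. The "fused model" is a hypothetical logistic regression with random weights over a concatenated 8-dimensional text vector and 16-dimensional image vector. The attack perturbs only the image slice, changing each of its features by at most `eps`.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical toy "fused" classifier: logistic regression over the
# concatenation [text features | image features]. Weights are random.
d_text, d_img = 8, 16
w = rng.standard_normal(d_text + d_img)
b = 0.0

def predict(x):
    """Probability of class 1."""
    return 1.0 / (1.0 + np.exp(-(x @ w + b)))

def bce_loss(x, y):
    """Binary cross-entropy for a single example with label y in {0, 1}."""
    p = predict(x)
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))

def fgsm_image_only(x, y, eps):
    """FGSM step restricted to the image slice: x + eps * sign(dLoss/dx)."""
    grad = (predict(x) - y) * w   # gradient of BCE w.r.t. x for logistic regression
    mask = np.zeros_like(x)
    mask[d_text:] = 1.0           # text features are left untouched
    return x + eps * np.sign(grad) * mask

x = rng.standard_normal(d_text + d_img)
y = 1.0
x_adv = fgsm_image_only(x, y, eps=0.1)
print(f"clean p={predict(x):.3f}  adversarial p={predict(x_adv):.3f}")
```

Because this toy model is linear, the bounded image-only perturbation moves the logit away from the true label by exactly `eps` times the L1 norm of the image weights, so the loss always rises. Deep fusion models are not linear, but the same signed-gradient step is a common first probe of their robustness.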

Limits and next steps

Despite strong progress, several limitations persist:

- Noisy data handling: Existing pipelines often rely on basic preprocessing; more sophisticated denoising or label‑noise‑robust training is needed.

- Dataset size optimisation: Many studies use benchmark collections drawn from Twitter, Flickr, and COCO but do not systematically explore the trade‑off between data volume and model complexity.

- Adversarial robustness: Current defenses (e.g., auxiliary‑classifier GANs, conditional GANs, multimodal noise generators) are promising but lack thorough evaluation across diverse tasks.

Future work should therefore concentrate on three fronts: developing noise‑resilient preprocessing pipelines, designing scalable training regimes for limited multimodal datasets, and building provably robust fusion architectures that can withstand adversarial pressure.
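The data-volume versus model-complexity trade-off noted under dataset size optimisation could be explored with a simple learning-curve sweep. The idea is to train on nested subsets of increasing size at several regularisation strengths and record held-out accuracy. The sketch below is hypothetical throughout. Synthetic Gaussian features stand in for fused text-image embeddings, and closed-form ridge regression on ±1 labels stands in for a fusion model. The ridge strength `lam` serves as a crude complexity knob, where larger means simpler.

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic stand-in for fused (text + image) features; a real study would
# use embeddings from the chosen encoders. Labels depend on the first 8 dims.
n_total, dim = 2000, 64
X = rng.standard_normal((n_total, dim))
true_w = np.zeros(dim)
true_w[:8] = 1.0
y = np.sign(X @ true_w + 0.5 * rng.standard_normal(n_total))

# Fixed held-out split; training subsets are nested prefixes of the pool.
X_test, y_test = X[1500:], y[1500:]
X_pool, y_pool = X[:1500], y[:1500]

def fit_ridge(X, y, lam):
    """Closed-form ridge regression on +/-1 labels; larger lam = simpler model."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

def accuracy(w, X, y):
    return float(np.mean(np.sign(X @ w) == y))

sizes = [50, 100, 200, 500, 1000, 1500]
lams = [0.1, 10.0, 100.0]
curve = {(n, lam): accuracy(fit_ridge(X_pool[:n], y_pool[:n], lam), X_test, y_test)
         for n in sizes for lam in lams}

for n in sizes:
    row = "  ".join(f"lam={lam:<5}: {curve[(n, lam)]:.3f}" for lam in lams)
    print(f"n={n:<5} {row}")
```

Reading the printed table one regularisation setting at a time shows where extra data stops paying off for a given complexity level. With real encoders, the same loop would wrap training and evaluation of the actual fusion model.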

FAQ

- What pre‑trained models should we start with for a new text‑image project?

  We recommend beginning with BERT (or its lightweight variants) for textual encoding and ResNet (or VGG) for visual encoding, as these models consistently achieve high baseline performance across the surveyed studies.

- Is attention‑based fusion worth the added complexity?

  In the studies we reviewed, attention mechanisms yield richer joint representations and improve performance on tasks requiring fine‑grained alignment (e.g., visual question answering). When computational resources allow, we suggest experimenting with cross‑modal attention after establishing a solid concatenation baseline.