We combine quantum Fisher information geometry with covering-number analysis to obtain explicit high‑probability generalization bounds for quantum machine‑learning models, and we explain how reducing the effective dimensionality of the parameter space leads to tighter guarantees.

TL;DR

- We bound the generalization error of quantum ML models using the quantum Fisher information matrix.

- The bound tightens as the effective dimension drops, and it improves with more data.

- Our approach links geometry, covering numbers, and dimensionality reduction: tools rarely combined in quantum learning theory.

Why it matters

Quantum machine learning promises speed‑ups for tasks such as chemistry simulation and combinatorial optimization. However, a model that works well on a training set may fail on new data, a problem known as over‑fitting. Classical learning theory offers tools like Rademacher complexity and covering numbers to predict over‑fitting, but quantum models have a very different parameter landscape. By translating those classical tools into the quantum domain, using the quantum Fisher information matrix (Q‑FIM) to describe curvature, we obtain the first rigorous, geometry‑aware guarantees that a quantum model will perform well on unseen inputs. This helps practitioners design models that are both powerful and reliable.

How it works (plain words)

Our method proceeds in four intuitive steps:

- Characterise the landscape. We compute the quantum Fisher information matrix for the model parameters. The determinant of this matrix tells us how “flat” or “curved” the parameter space is.

- Control complexity with covering numbers. Using the curvature information, we bound the number of small balls needed to cover the whole parameter space. Fewer balls mean a simpler hypothesis class.

- Translate to Rademacher complexity. Covering‑number bounds feed into a standard inequality that limits the Rademacher complexity, a measure of how well the model can fit random noise.

- Derive a high‑probability generalization bound. Combining the Rademacher bound with a concentration inequality (Talagrand’s bound) gives an explicit formula that relates training error, sample size, effective dimension, and geometric constants.
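As a rough illustration of the first step (not code from the paper), the sketch below computes the quantum Fisher information matrix of a pure state by finite differences, using the standard pure-state formula F_ij = 4 Re[⟨∂_i ψ|∂_j ψ⟩ − ⟨∂_i ψ|ψ⟩⟨ψ|∂_j ψ⟩]. The single-qubit model `state`, the helper name `qfim`, and the step size `eps` are assumptions chosen for the example.

```python
import numpy as np

def state(params):
    """Illustrative single-qubit model: cos(t/2)|0> + e^{i p} sin(t/2)|1>."""
    theta, phi = params
    return np.array([np.cos(theta / 2), np.exp(1j * phi) * np.sin(theta / 2)])

def qfim(state_fn, params, eps=1e-6):
    """Pure-state QFIM via central finite differences (hypothetical helper)."""
    params = np.asarray(params, dtype=float)
    psi = state_fn(params)
    grads = []
    for k in range(len(params)):
        shift = np.zeros_like(params)
        shift[k] = eps
        # Central difference approximation of d|psi>/d(params[k]).
        grads.append((state_fn(params + shift) - state_fn(params - shift)) / (2 * eps))
    n = len(params)
    F = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            # np.vdot conjugates its first argument, giving <d_i psi | d_j psi>.
            term = np.vdot(grads[i], grads[j]) - np.vdot(grads[i], psi) * np.vdot(psi, grads[j])
            F[i, j] = 4 * term.real
    return F

theta, phi = 1.0, 0.3
F = qfim(state, [theta, phi])
det_F = np.linalg.det(F)  # for this model, analytically sin(theta)**2
```

For this toy model the matrix is diag(1, sin²θ), so the determinant vanishes at the poles θ = 0 and θ = π, which is the kind of degeneracy a positive lower bound on the determinant rules out.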

Finally, we note that many quantum circuits can be projected onto a lower‑dimensional subspace (e.g., by pruning or principal‑component analysis). Reducing the effective dimension shrinks the exponential term in the bound, directly improving the guarantee.
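One way to picture the projection step is plain principal-component analysis on a collection of parameter vectors. The sketch below is an assumption-laden toy, not the paper's procedure: the synthetic data `thetas`, the 99% variance threshold, and all variable names are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 200 parameter vectors in 10 dimensions that vary
# (up to tiny noise) along only 3 orthonormal directions.
latent = rng.normal(size=(200, 3))
directions, _ = np.linalg.qr(rng.normal(size=(10, 3)))  # 10x3, orthonormal columns
thetas = latent @ directions.T + 1e-3 * rng.normal(size=(200, 10))

# PCA via SVD of the centred data.
centered = thetas - thetas.mean(axis=0)
_, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
explained = singular_values**2 / np.sum(singular_values**2)

# Smallest number of components explaining at least 99% of the variance.
k = int(np.searchsorted(np.cumsum(explained), 0.99) + 1)
basis = vt[:k]                    # rows span the retained subspace
projected = centered @ basis.T    # coordinates in the k-dimensional subspace
```

Here `k` plays the role of a reduced dimension: the model is then described by `k` coordinates instead of the original 10.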

What we found

Our theoretical analysis yields a clear, data‑dependent guarantee:

- The generalization error decays as the sample size grows, with a prefactor that depends on the effective dimension and a geometry term.

- When the Q‑FIM has a positive lower bound on its determinant (i.e., the parameter space is well‑conditioned), the exponential factor remains modest.

- Dimensionality reduction, whether by explicit pruning, low‑rank approximations, or post‑training projection, reduces the effective dimension, which tightens the bound and makes the model less prone to over‑fitting.
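To see why dimension matters so much for covering numbers, consider the textbook volume bound for a Euclidean ball of radius R in d dimensions: it can be covered by at most (1 + 2R/ε)^d balls of radius ε. This is a generic Euclidean stand-in, not the Q‑FIM‑weighted bound from the paper, and the function name and numbers below are illustrative assumptions.

```python
import math

def log_covering_bound(dim, radius, eps):
    """Log of the standard volume bound (1 + 2R/eps)^dim for a Euclidean ball."""
    return dim * math.log(1 + 2 * radius / eps)

# Same radius and resolution, two different dimensions.
full = log_covering_bound(dim=50, radius=1.0, eps=0.1)
reduced = log_covering_bound(dim=10, radius=1.0, eps=0.1)
ratio = full / reduced  # log-covering number is linear in the dimension
```

Because the log-covering number is linear in the dimension, cutting the dimension by a factor of five cuts this complexity measure by the same factor.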

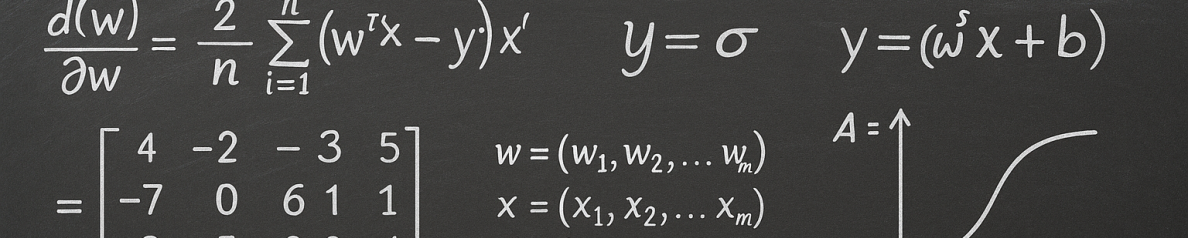

Key equation

![]()

This inequality states that the true risk is bounded by the empirical risk plus two correction terms: a geometry‑dependent term that shrinks as the sample size grows and a confidence term.

The geometric constant in the bound is built from four quantities: the volume of the full parameter space, the volume of a lower‑dimensional subspace, a lower bound on the determinant of the Q‑FIM, and a Lipschitz constant for the noisy model.
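For orientation, the standard textbook Rademacher bound has the same overall shape of empirical risk plus a complexity term plus a confidence term. It is shown here only as a generic template for losses taking values in [0, 1]; it is not the paper's inequality and contains no Q‑FIM or effective‑dimension terms. The symbols (sample size N, failure probability δ, hypothesis class 𝓗, loss ℓ) are notation assumed for this sketch.

```latex
\text{With probability at least } 1-\delta \text{ over a sample } S \text{ of size } N,
\text{ for all } h \in \mathcal{H}:
\qquad
R(h) \;\le\; \widehat{R}_S(h) \;+\; 2\,\mathfrak{R}_N(\ell \circ \mathcal{H})
\;+\; \sqrt{\frac{\log(1/\delta)}{2N}} .
```

In this template the covering-number analysis enters through the Rademacher complexity term, and the last term is the price of asking for a high-probability guarantee.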

Limits and next steps

Our analysis relies on assumptions and conditions that are common in learning‑theory work but may limit immediate practical use:

- We assume the loss function is Lipschitz continuous and the gradients of the quantum model are uniformly bounded.

- We require a positive lower bound on the determinant of the Q‑FIM; highly ill‑conditioned circuits could violate this condition.

- The exponential term can become large if the parameter‑space volume or Lipschitz constant is not carefully controlled.
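A practical way to check the determinant condition on a given matrix is to look at its smallest eigenvalue, condition number, and log-determinant. The helper below is a hypothetical diagnostic, not part of the paper, and the two example matrices are made up.

```python
import numpy as np

def qfim_diagnostics(F):
    """Conditioning diagnostics for a symmetric positive semi-definite matrix."""
    F = np.asarray(F, dtype=float)
    F = 0.5 * (F + F.T)                  # symmetrise to suppress numerical asymmetry
    eigvals = np.linalg.eigvalsh(F)      # eigenvalues in ascending order
    sign, logdet = np.linalg.slogdet(F)  # stable log-determinant
    return {
        "min_eig": float(eigvals[0]),
        "cond": float(eigvals[-1] / eigvals[0]) if eigvals[0] > 0 else float("inf"),
        "logdet": float(logdet) if sign > 0 else float("-inf"),
    }

well = qfim_diagnostics(np.diag([1.0, 0.7]))    # comfortably invertible
ill = qfim_diagnostics(np.diag([1.0, 1e-12]))   # nearly singular
```

Working with the log-determinant avoids underflow when the matrix is large or nearly singular, which is exactly the regime where the condition is at risk.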

Future research directions include:

- Designing training regularizers that directly enforce a well‑behaved Q‑FIM.

- Developing quantum‑specific dimensionality‑reduction algorithms that preserve expressive power while lowering the effective dimension.

- Empirically testing the bound on near‑term quantum hardware to validate the theoretical predictions.

FAQ

- What is the quantum Fisher information matrix (Q‑FIM) and why does it matter?

- The Q‑FIM measures how sensitively a quantum state (or circuit output) changes with respect to small parameter variations. Its determinant captures the curvature of the parameter landscape; a larger determinant indicates a well‑conditioned space where learning is stable, which directly reduces the complexity term in our bound.

- How does reducing the effective dimension improve generalization?

- All three terms in the bound become smaller when the effective dimension drops. In particular, the exponential factor shrinks, and the prefactor scales with the effective dimension. Dimensionality reduction therefore tightens the guarantee and makes the model less able to fit random noise.

- Is the bound applicable to noisy quantum hardware?

- Yes. Our derivation explicitly includes a noise parameter through the noisy model and shows that the bound remains valid as long as the noise stays within a regime where the Lipschitz constant and the Q‑FIM lower bound are preserved.
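To make the link between a noise parameter and a Lipschitz constant concrete, here is a toy example that assumes a single-qubit depolarizing channel with strength `p`. This noise model is an assumption for illustration and may differ from the one analysed in the paper. For the output ⟨Z⟩ of a state rotated by angle θ, the noisy model is (1 − p)·cos θ, so its Lipschitz constant in θ is 1 − p.

```python
import numpy as np

Z = np.diag([1.0, -1.0])

def noisy_expectation(theta, p):
    """<Z> after a depolarizing channel of strength p (illustrative noise model)."""
    psi = np.array([np.cos(theta / 2), np.sin(theta / 2)])
    rho = np.outer(psi, psi.conj())
    rho_noisy = (1 - p) * rho + p * np.eye(2) / 2  # depolarizing channel
    return float(np.real(np.trace(rho_noisy @ Z)))

def lipschitz_estimate(p, num=2001):
    """Largest finite-difference slope of theta -> <Z> on a grid over [0, 2*pi]."""
    thetas = np.linspace(0.0, 2 * np.pi, num)
    values = np.array([noisy_expectation(t, p) for t in thetas])
    return float(np.max(np.abs(np.diff(values) / np.diff(thetas))))

clean = lipschitz_estimate(0.0)  # close to 1
noisy = lipschitz_estimate(0.2)  # close to 0.8
```

In this toy setting, noise makes the model smoother in its parameter but also flattens its response, which is one reason the Lipschitz constant and the Q‑FIM lower bound have to be tracked together.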

Read the paper

References

Khanal, B., & Rivas, P. (2025). Data-dependent generalization bounds for parameterized quantum models under noise. The Journal of Supercomputing, 81(611), 1–34. https://doi.org/10.1007/s11227-025-06966-9.